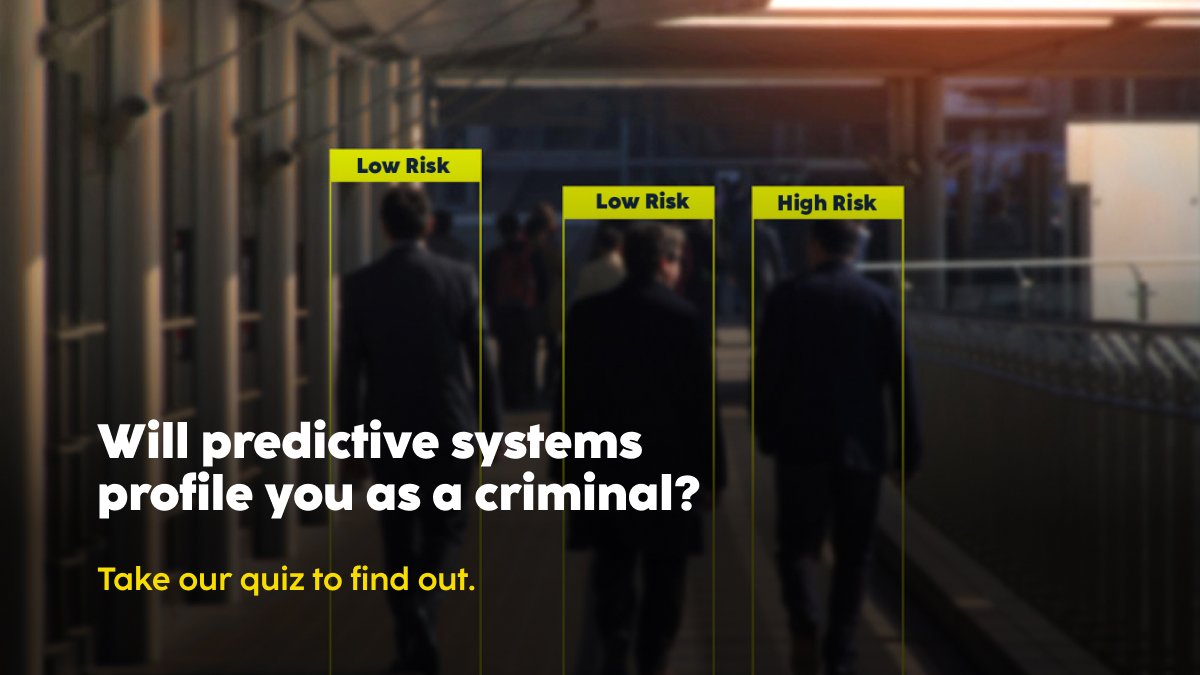

Will predictive systems profile you as a criminal?

Police forces and criminal justice authorities across Europe are using data, algorithms and artificial intelligence (AI) to ‘predict’ if certain people are at ‘risk’ of committing crime or likely to commit crimes in future, and whether and where crime will occur in certain areas in future. We at Fair Trials are calling for a ban on ‘predictive’ policing and justice systems. Take the quiz below to see if you’d be profiled or seen as a ‘risk’ – and find out how to support our campaign.

Source: Predictive Policing – Fair Trials

If you’ve been watching as so many murders and acts of terrorism are committed by people who broadcast their intentions and motives to the world, then for you, as for me, this is a big head-scratcher.

You know that if they’re doing this in Europe now, they’ve probably been doing it in the U.S. for a while, and yet…

I think, though, that if scores of potential violent criminals and terrorists were being caught before they did serious harm, the authorities would be bragging about it nonstop. We would hear every day about what a wonderful job the police are doing, instead of the literal thousands of lives lost at the hands of murderers in uniform.

Something just doesn’t add up about this. It looks very much like an excuse to target activists. Worse, it could be an excuse to do “early intervention” on kids who show critical/independent thinking abilities, to label them as antisocial. Even darker, it could be used to target LGBTQ+ people in places where the laws are discriminatory.
